TRILLapalooza

April 28, 2012 5 Comments

If there’s one thing people lament in the routing and switching world, it’s the spanning tree protocol and the way Ethernet forwarding is done (or more specifically, it’s limitations). I’ve made my own lament last year (don’t cross the streams), and it’s come up recently in Ivan Pepelnjak’s blog. Even server admins who’ve never logged into a switch in their lives know what spanning-tree is: It is the destroyer of uptime, causer of the Sev1 events, a pox among switches.

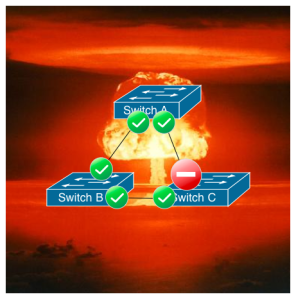

I am become spanning-tree, destroyer of networks

The root of the problem is the way Ethernet forwarding is done: There can’t be more than one path for an Ethernet frame to take from a source MAC to a destination MAC. This basic limitation has not changed for the past few decades.

And yet, for all the outages, all of the configuration issues and problems spanning-tree has caused, there doesn’t seem to be much enthusiasm for the more fundamentals cures: TRILL (and the current proprietary implementations), SPB, QFabric, and to a lesser extent OpenFlow (data center use cases), and others. And although the OpenFlow has been getting a lot of hype, it’s more because VCs are drooling over it than from its STP-slieghing ways.

For a while in the early 2000s, it looked like we might get rid of it for the most part. There was a glorious time when we started to see multi-layer switches that could route Layer 3 as fast as they could switch Layer 2, giving us the option of getting rid spanning-tree entirely. Every pair of switches, even at the access layer, would be it’s own Layer 3 domain. Everything was routed to everywhere, and the broadcast domains were very small so there wasn’t a possibility for Ethernet to take multiple paths. And with Layer 3 routing, multi-pathing was easy through ECMP. Convergence on a failed link was way faster than spanning tree.

Then virtualization came, and screwed it all up. Now Layer 3 wasn’t going to work for a lot o the workloads, and we needed to build huge Layer 2 networks. Although some non-virtualization uses, the Layer-3 everywhere solution works great. To take a look at a wonderfully multi-path, high bandwidth environment, check out Brad Hedlund’s own blog entry on creating a Hadoop super network with shit-tons of bandwidth out of 10G and 40G low latency ports.

Overlays

Which brings me to overlays. There are some that propose overlay networks, such as VXLAN, NVGRE, and Nicira as solutions to the Ethernet multipathing problem (among other problems). An overlay technology like VXLAN not only brings us back to the glory days of no spanning-tree by routing to the access layer, but solves another issue that plagues large scale deployments: 4000+ VLANs ain’t enough. VXLAN for instance has a 24-bit identifier on top of the normal 12-bit 802.1Q VLAN identifier, so that’s 236 separate broadcast domains, giving us the ability to support 68,719,476,736 VLANs. Hrm.. that would be….

While I like (and am enthusiastic) about overlay technologies in general, I’m not convinced they are the final solution we need for Ethernet’s current forwarding limitations. Building an overlay infrastructure (at least right now) is a more complicated (and potentially more expensive) prospect than TRILL/SPB, depending on how you look at it. Also availability is an issue currently (likely to change, of course), since NVGRE has no implementations I’m aware of, and VXLAN only has one (Cisco’s Nexus 1000v). Also, VXLAN doesn’t terminate into any hardware currently, making it difficult to put in load balancers and firewalls that aren’t virtual (as mentioned in the Packet Pusher’s VXLAN podcast).

Of course, I’m afraid TRILL doesn’t have it much better in the way of availability. Only two vendors that I’m aware of ship TRILL-based products, Brocade with VCS and Cisco with FabricPath, and both FabricPath and VCS only run on a few switches out of their respective vendor’s offerings. As has often been discussed (and lamented), TRILL has a new header format, new silicon is needed to implement TRILL (or TRILL-based) offerings in any switch. So sadly it’s not just a matter of adding new firmware, the underlying hardware needs to support it too. For instance, the Nexus 5500s from Cisco can do TRILL (and the code has recently been released) while the Nexus 5000 series cannot.

It had been assumed that the vendors that use merchant silicon for their switches (such as Arista and Dell Force10) couldn’t do TRILL, because the merchant silicon didn’t. Turns out, that’s not the case. I’m still not sure which chips from the merchant vendors can and can’t do TRILL, but the much ballyhooed Broadcom Trident/Trident+ chipset (BCM56840 I believe, thanks to #packetpushers) can do TRILL. So anything built on on Trident should be able to do TRILL. Which right now is a ton of switches. Broadcom is making it rain Tridents right now. The new Intel/Fulcrum chipsets can do TRILL as well I believe.

And TRILL though does have the advantage of boing stupid easy. Ethan Banks and I were paired up during NFD2 at Brocade, and tasked with configuring VCS (built on pre-standard TRILL). It took us 5 minutes and just a few commands. FabricPath (Cisco’s pre-standard implementation built on TRILL) is also easy: 3 commands. If you can’t configure FabricPath, you deserve the smug look you get from Smug Cisco Guy. Here is how you turn on FabricPath on a Nexus 7K:

switch# config terminal switch(config)# feature-set fabricpath switch(config)# mac address learning-mode conversational vlan 1-10

Non-overlay solutions to STP without TRILL/SPB/QFabirc/etc. include MLAG (commonly known as Cisco’s trademarked term Etherchannel) and MC-LAG (Multi-chassis Link Aggregration), also known as VLAG, vPC, VSS depending on the vendor. They also provide multi-pathing in a sense that while there are multiple active physical paths, no single flow will have more than one possible path, providing both redundancy and full link utilization. But it’s all manually configured at each link, and not nearly as flexible (or easy) as TRILL to instantiate. MLAG/MC-LAG can provide simple multi-path scenarios, while TRILL is so flexible, you can actually get yourself into trouble (as Ivan has mentioned here). So while MLAG/MC-LAG work as workarounds, why not just fix what they workaround? It would be much simpler.

Vendor Lock-In or FUD?

Brocade with VCS and Cisco with FabricPath are currently proprietary implementations of TRILL, and won’t work with each other or any other version of TRILL. The assumption is that when TRILL becomes more prevalent, they will have standards-based implementations that will interoperate (Cisco and Brocade have both said they will). But for now, it’s proprietary. Oh noes! Some vendors have decried this as vendor lock-in, but I disagree. For one, you’re not going to build a multi-vendor fabric, like staggering two different vendors every other rack. You might not have just one vendor amongst your networking gear, but your server switch blocks, core/aggregation, and other such groupings of switches are very likely to be single vendor. Every product has a “proprietary boundary” (new term! I made it!). Even token ring, totally proprietary, could be bridged to traditional Ethernet networks. You can also connect your proprietary TRILL fabrics to traditional STP domains at the edge (although there are design concerns as Ivan Pepelnjak has noted).

QFabric will never interoperate with another vendor, that’s their secret sauce (running on Broadcom Trident+ if the rumors are to be believed). Still, QFabric is STP-less, so I’m a fan. And like TRILL, it’s easy. My only complaint about QFabric right now is that it requires a huge port count (500+ 10 Gbit ports) to make sense (so does Nexus 7000K with TRILL, but you can also do 5500s now). Interestingly enough, Juniper’s Anjan Venkatramani did a hit piece on TRILL, but the joke is on them because it’s on tech target behind a register-wall, so no one will read it.

So far, the solutions for Ethernet forwarding are as follows: Overlay networks (may be fantastic for large environments, though very complex), Layer 3 everywhere (doable, but challenges in certain environments), and MLAG/MCAG (tough to scale, manual configuration but workable). All of that is fine. I’ve nothing against any of those technologies. In fact, I’m getting rather excited about VXLAN/Nicira overlays. I still think we should fix Layer 2 forwarding with TRILL, SPB, or something like it. And while even if every vendor went full bore on one standard, it would be several years before we were able to totally rid spanning-tree in our networks.

But wouldn’t it be grand?

Further resources on TRILL (and where to feast on brains)

Hi Tony,

I tend to agree with your general position that there will be a place for TRILL in cloud data centers. There will be a market for TRILL created by those choosing to stick with pure L2 physical networks (shunning Overlays) and looking to make that environment work better. The size of that market however remains to be seen, but It’ll be there (I think). In fact, I think the scale-out Leaf/Spine architecture running TRILL for the East-West fabric is an interesting play that I’ve been think about a lot lately.

As for the notion that the physical network will generally be one vendor, that may be true in most cases, but with pure TRILL it doesn’t have to be that way. We may very well see customers bidding out the Top of Rack and Spine layer separately. In fact, some large cloud data centers are already doing that today.

Good post. And Thanks for the link!!

Cheers,

Brad

I don’t know that data centers will shun overlays, as in refuse to use them. I think that it may just not make sense to set up and overlay in terms of cost/complexity.

I like TRILL because it greatly simplifies the Layer 2 issues in the data center and the campus.

Of course, time will tell.

I’m not sure the “cost/complexity” is actually there. If you look at Rackspace, a company where infrastructure cost directly affects the bottom line, they just publicly announced they’re using Overlays in production — and cited that making such a solution simplifies the network.

VXLAN is supported in the OVS, not just N1Kv. While I like the concept of TRILL for the vast majority of customers MLAG (multi-chassis channeling) is a valid and scalable solution – which gives TRILL time to mature or even better the applications to address the restrictions they have imposed…

Tony, quoting Oppenheimer’s Bhagavad Gita and adapting it (but not that much) to spanning tree made my day 🙂 keep up !

Tom