Ethernet Congestion: Drop It or Pause It

February 2, 2013 5 Comments

Congestion happens. You try to put a 10 pound (soy-based vegan) ham in a 5 pound bag, it just ain’t gonna work. And in the topsy-turvey world of data center switches, what do we do to mitigate congestion? Most of the time, the answer can be found in the wisdom of Snoop Dogg/Lion.

Of course, when things are fine, the world of Ethernet is live and let live.

We’re fine. We’re all fine here now, thank you. How are you?

But when push comes to shove, frames get dropped. Either the buffer fills up and tail drop occurs, or QoS is configured and something like WRED (Weight Random Early Detection) kicks in to proactively drop frames before taildrop can occur (mostly to keep TCP’s behavior from causing spiky behavior).

The Bit Grim Reaper is way better than leaky buckets

Most congestion remediation methods involve one or more types of dropping frames. The various protocols running on top of Ethernet such as IP, TCP/UDP, as well as higher level protocols, were written with this lossfull nature in mind. Protocols like TCP have retransmission and flow control, and higher level protocols that employ UDP (such as voice) have other ways of dealing with the plumbing gets stopped-up. But dropping it like it’s hot isn’t the only way to handle congestion in Ethernet:

Please Hammer, Don’t PAUSE ‘Em

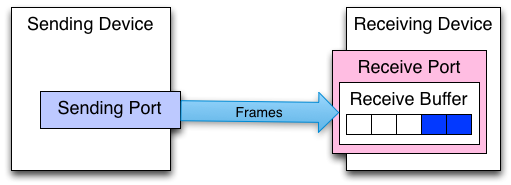

Ethernet has the ability to employ flow control on physical interfaces, so that when congestion is about to occur, the receiving port can signal to the sending port to stop sending for a period of time. This is referred to simply as 802.3x Ethernet flow control, or as I like to call it, old-timey flow control, as it’s been in Ethernet since about 1997. When a receive buffer is close to being full, the receiving side will send a PAUSE frame to the sending side.

Too legit to drop

A wide variety of Ethernet devices support old-timey flow control, everything from data center switches to the USB dongle for my MacBook Air.

One of the drawbacks of old-timey flow control is that it pauses all traffic, regardless of any QoS considerations. This creates a condition referred to as HoL (Head of Line) blocking, and can cause higher priority (and latency sensitive) traffic to get delayed on account of lower priority traffic. To address this, a new type of flow control was created called 802.1Qbb PFC (Priority Flow Control).

PFC allows a receiving port send PAUSE frames that only affect specific CoS lanes (0 through 7). Part of the 802.1Q standard is a 3-bit field that represents the Class of Service, giving us a total of 8 classes of service, though two are traditionally reserved for control plane traffic so we have six to play with (which, by the way, is a lot simpler than the 6-bit DSCP field in IP). Utilizing PFC, some CoS values can be made lossless, while others are lossfull.

Why would you want to pause traffic instead of drop traffic when congestion occurs?

Much of the IP traffic that traverses our data centers is OK with a bit of loss. It’s expected. Any protocol will have its performance degraded if packet loss is severe, but most traffic can take a bit of loss. And it’s not like pausing traffic will magically make congestion go away.

But there is some traffic that can benefit from losslessness, and and that just flat out requires it. FCoE (Fibre Channel of Ethernet), a favorite topic of mine, requires losslessness to operate. Fibre Channel is inherently a lossless protocol (by use of B2B or Buffer to Buffer credits), since the primary payload for a FC frame is SCSI. SCSI does not handle loss very well, so FC was engineered to be lossless. As such, priority flow control is one of the (several) requirements for a switch to be able to forward FCoE frames.

iSCSI is also a protocol that can benefit from pause congestion handling rather than dropping. Instead of encapsulating SCSI into FC frames, iSCSI encapsulates SCSI into TCP segments. This means that if a TCP segment is lost, it will be retransmitted. So at first glance it would seem that iSCSI can handle loss fine.

From a performance perspective, TCP suffers mightily when a segment is lost because of TCP congestion management techniques. When a segment is lost, TCP backs off on its transmission rate (specifically the number of segments in flight without acknowledgement), and then ramps back up again. By making the iSCSI traffic lossless, packets will be slowed down during congestions but the TCP congestion algorithm wouldn’t be used. As a result, many iSCSI vendors recommend turning on old-timey flow control to keep packet loss to a minimum.

However, many switches today can’t actually do full losslessness. Take the venerable Catalyst 6500. It’s a switch that would be very common in data centers, and it is a frame murdering machine.

The problem is that while the Catalyst 6500 supports old-timey flow control (it doesn’t support PFC) on physical ports, there’s no mechanism that I’m aware of to prevent buffer overruns from one port to another inside the switch. Take the example of two ingress Gigabit Ethernet ports sending traffic to a single egress Gigabit Ethernet port. Both ingress ports are running at line rate. There’s no signaling (at least that I’m aware of, could be wrong) that would prevent the egress ports from overwhelming the transmit buffer of the ingress port.

Many frames enter, not all leave

This is like flying to Hawaii and not reserving a hotel room before you get on the plane. You could land and have no place to stay. Because there’s no way to ensure losslessness on a Catalyst 6500 (or many other types of switches from various vendors), the Catalyst 6500 is like Thunderdome. Many frames enter, not all leave.

Catalyst 6500 shown with a Sup2T

The new generation of DCB (Data Center Bridging) switches, however, use a concept known as VoQ (Virtual Output Queues). With VoQs, the ingress port will not send a frame to the egress port unless there’s room. If there isn’t room, the frame will stay in the ingress buffer until there’s room.If the ingress buffer is full, it can have signaled the sending port it’s connected to to PAUSE (either old-timey pause or PFC).

This is a technique that’s been in used in Fibre Channel switches from both Brocade and Cisco (as well as others) for a while now, and is now making its way into DCB Ethernet switches from various vendors. Cisco’s Nexus line, for example, make use of VoQs, and so do Brocade’s VCS switches. Some type of lossless ability between internal ports is required in order to be a DCB switch, since FCoE requires losslessness.

DCB switches require lossless backplanes/internal fabrics, support for PFC, ETS (Enhanced Transmission Selection, a way to reserve bandwidth on various CoS lanes), and DCBx (a way to communicate these capabilities to adjacent switches). This makes them capable of a lot of cool stuff that non-DCB switches can’t do, such as losslessness.

One thing to keep in mind, however, is when Layer 3 comes into play. My guess is that even in a DCB switch that can do Layer 3, losslessness can’t be extended beyond a Layer 2 boundary. That’s not an issue with FCoE, since it’s only Layer 2, but iSCSI can be routed.

Nicely explained. Arista’s also have output queues. Juniper EX does not.

Great write-up and very entertaining. Thanks for the explanation!!!

i have question here in what sense ethernet provide congestion control and in what sense ethernet provide

Pingback: How do Node.js Streams work? - Tutorial Guruji

Pingback: How do Node.js Streams work? - Code Solution