Health Checking On Load Balancers: More Art Than Science

March 6, 2012

One of the trickiest aspects of load balancing (and load balancing has lots of tricky aspects) is how to handle health checking. Health checking is, of course, the process whereby the load balancer (or application delivery controller) performs periodic checks on the servers to make sure they’re up and responding. If a server is down for any reason, the load balancer should detect this and stop sending traffic its way.

Pretty simple functionality, really. Some load balancers call these keep-alives or use other terms, but it’s all the same: make sure the server is still alive.

One of the misconceptions about health checking is that it can instantly detect a failed server. It can’t. Instead, a load balancer can detect a server failure within a window of time. And that window of time depends on a few factors:

- Interval (how often is the health check performed)

- Timeout (how long the load balancer waits for a response before it gives up)

- Count (some load balancers will try several times before marking a server as “down”)
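To make the three knobs concrete, here is a minimal Python sketch of the per-server bookkeeping a load balancer might do. The names `HealthCheckSettings`, `ServerHealth` and `record_probe` are hypothetical, probe scheduling is left out (so `interval` is only stored here), and it assumes a single good probe brings a server back up, which not every load balancer does.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class HealthCheckSettings:
    interval: float = 15.0  # seconds between probes (used by a scheduler, not shown)
    timeout: float = 5.0    # seconds to wait for a reply before the probe counts as failed
    count: int = 2          # consecutive failures before the server is marked down


class ServerHealth:
    """Hypothetical per-server health state driven by probe results."""

    def __init__(self, settings: HealthCheckSettings) -> None:
        self.settings = settings
        self.up = True
        self.consecutive_failures = 0

    def record_probe(self, response_time: Optional[float]) -> bool:
        """Record one probe; response_time is None if no reply arrived at all.

        Returns True if the server is still considered up.
        """
        failed = response_time is None or response_time > self.settings.timeout
        if failed:
            self.consecutive_failures += 1
            if self.consecutive_failures >= self.settings.count:
                self.up = False
        else:
            # Assumption: one good probe resets the counter and restores the server.
            self.consecutive_failures = 0
            self.up = True
        return self.up


health = ServerHealth(HealthCheckSettings())
print(health.record_probe(0.2))   # True: answered well within the timeout
print(health.record_probe(None))  # True: first failure, count is 2
print(health.record_probe(6.0))   # False: second consecutive failure (past the 5 s timeout)
```

Setting `count=1` in this sketch marks the server down on the very first failed probe.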

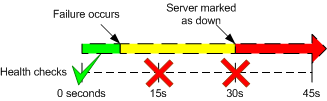

As an example, take a very common interval setting of 15 seconds, a timeout of 5 seconds, and a count of 2. If I took a shotgun to a server (which would ensure that it’s down), how long would it take the load balancer to detect the failure?

In the worst-case scenario for time to detection, the failure occurs right after the last successful health check, so about 14 seconds pass before the next check even runs and the first failure is detected. The health check fails once, so we wait another 15 seconds before the second health check. Now that’s two down, and we’ve got a server marked as down.

So that’s about 29 seconds in the worst-case scenario, or 16 seconds in the best-case scenario. Sometimes server administrators hear that and want you to tune the variables down so they can detect a failure more quickly. However, that’s about as low as they should go.
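The arithmetic above can be sketched as a small simulation. It is a hypothetical model, not any vendor's algorithm: probes start at t = 0, a healthy server answers instantly, and a probe to a dead server fails only once its timeout expires. The figures above leave that timeout out, and several comments at the end of this post argue it should be added; they also differ on whether the interval runs from the start of one probe to the start of the next (`"fixed-rate"` below) or from the end of one probe, timeout included, to the start of the next (`"fixed-delay"`). Both labels and the function name are illustrative only.

```python
def detection_time(interval: float, timeout: float, count: int,
                   fail_at: float, schedule: str = "fixed-rate") -> float:
    """Seconds between the failure at `fail_at` and the server being marked down.

    Hypothetical model: probes start at t = 0, a probe sent before `fail_at`
    succeeds instantly, and a probe sent at or after `fail_at` is declared
    failed only when `timeout` expires.
    """
    if schedule not in ("fixed-rate", "fixed-delay"):
        raise ValueError(f"unknown schedule: {schedule!r}")
    if interval <= 0 or count < 1:
        raise ValueError("interval must be positive and count at least 1")

    probe_start = 0.0
    consecutive_failures = 0
    while True:
        if probe_start < fail_at:
            finished = probe_start            # healthy: instant reply
            consecutive_failures = 0
        else:
            finished = probe_start + timeout  # dead: wait out the full timeout
            consecutive_failures += 1
            if consecutive_failures == count:
                return finished - fail_at
        if schedule == "fixed-rate":
            probe_start += interval             # next probe `interval` after this one started
        else:
            probe_start = finished + interval   # next probe `interval` after this one finished


# Example from the post: interval 15 s, timeout 5 s, count 2, failure 1 s after a good probe.
print(detection_time(15, 0, 2, fail_at=1))                          # 29.0 (timeout ignored)
print(detection_time(15, 5, 2, fail_at=1, schedule="fixed-rate"))   # 34.0
print(detection_time(15, 5, 2, fail_at=1, schedule="fixed-delay"))  # 39.0
```

With the timeout set to 0 the sketch reproduces the 29-second worst case above. The fixed-delay figure matches the 39 seconds worked out in the comments, and a failure that lands just as a probe goes out (`fail_at=0`) gives 25 seconds under fixed-delay and 20 seconds under fixed-rate. Which schedule a particular load balancer follows is worth checking in its documentation.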

If you set the interval to less than 15 seconds, depending on the load balancer, it can unduly burden the control plane processor with all those health checks. This is especially true if you have hundreds of servers in your server farm. You can adjust the count down to 1, which is common, but remember that a server would then be marked down on just a single health check failure.
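A back-of-the-envelope sketch of why a shorter interval weighs on the control plane: each monitored server adds one probe per interval, so the probe rate grows with the number of servers and shrinks as the interval shrinks. The 200-server farm and the `probe_rate` helper are hypothetical, and the real cost per probe depends on the check type (an HTTP GET costs more than a TCP connect).

```python
def probe_rate(servers: int, interval: float, monitors_per_server: int = 1) -> float:
    """Probes per second the load balancer has to originate (hypothetical helper)."""
    if interval <= 0:
        raise ValueError("interval must be positive")
    return servers * monitors_per_server / interval


# Hypothetical farm of 200 servers, one health monitor each.
for interval in (15, 10, 5, 2):
    print(f"interval {interval:>2} s -> {probe_rate(200, interval):5.1f} probes/s")
```

Going from a 15-second to a 2-second interval multiplies the probe rate by 7.5 (from about 13 to 100 probes per second in this example), and every extra monitor per server multiplies it again.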

I see you have failed a single health check. Pity.

The worst value to tune down, however, is the timeout value. I once had a client tell me that the load balancer was causing all sorts of performance issues in their environment. After a little investigating, it turned out that they had set the timeout value to 1 second. If a server didn’t come up with the appropriate response to the health check within 1 second, the server would be marked down. Consequently, every server in the farm was bouncing up and down more than a low-rider in a Dr. Dre video.

As a result, users were being bounced from one server to another, with lots of TCP RSTs and logging in again (the application was stateful, requiring each user to stay tied to a specific server to keep their session going). Also, when one server took 1.1 seconds to respond, it was taken out of rotation. The other servers had to pick up the slack, and thus carried more load. It wasn’t long before one of them took more than a second to respond, and the failures cascaded over and over again.
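The cascade can be illustrated with a toy model. Everything here is a hypothetical assumption with made-up numbers: load is split evenly across the servers in rotation, each server's response time is a base latency plus a cost proportional to its share of the load, and a server is pulled as soon as it exceeds the timeout. Pulled servers never return in this sketch, so it shows the one-way cascade rather than the flapping that follows when they recover.

```python
from typing import List, Sequence


def servers_left_in_rotation(base_latency: Sequence[float], total_load: float,
                             cost_per_unit: float, timeout: float) -> List[int]:
    """Toy cascade model: indexes of servers still in rotation once things settle."""
    in_rotation = set(range(len(base_latency)))
    while in_rotation:
        load_each = total_load / len(in_rotation)  # even split across survivors
        too_slow = {i for i in in_rotation
                    if base_latency[i] + cost_per_unit * load_each > timeout}
        if not too_slow:
            break                    # everyone answers within the timeout: stable
        in_rotation -= too_slow      # survivors absorb the slack on the next round
    return sorted(in_rotation)


BASE_LATENCY = [0.35, 0.55, 0.65, 0.80]  # seconds, made-up numbers
print(servers_left_in_rotation(BASE_LATENCY, 40, 0.03, timeout=1.0))  # []
print(servers_left_in_rotation(BASE_LATENCY, 40, 0.03, timeout=5.0))  # [0, 1, 2, 3]
```

With a 1-second timeout the slowest server drops first, pushing the next one over the limit until nothing is left; with a 5-second timeout the same farm stays fully in rotation.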

When I talked to the customer about this, they said they wanted their site to be as fast as possible, so they set the timeout very low. They didn’t want users going onto a slow server. A noble aspiration, but the wrong way to accomplish that goal. The right way would be to add more servers. We tweaked the timeout value to 5 seconds (about as low as I would set it), and things calmed down considerably. The servers were much happier.

So tweaking those knobs (interval, timeout, count) is always a compromise between detecting a server failure quickly, giving a server a decent chance to respond, and not overwhelming the control plane. As a result, it’s not an exact science. Still, there are guidelines to keep in mind, and if you set expectations correctly, the server/application team will be a lot happier.

Thanks for the post! You bring up some excellent points. I think another aspect that needs to be considered, in addition to what you outlined above, is the quality of the health check. Certain health checks may be more appropriate than others, depending on the application and deployment. For instance, TCP half-open checks are likely less reliable or helpful with web applications, where the socket may respond but the back-end application component may not. Content checks (e.g., an HTTP GET) may serve better in this scenario. These are the situations where ADC/LB-focused engineers really get to show their artsiness, to borrow your term.
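The difference this comment describes can be sketched with Python's standard library. Note that `tcp_connect_check` completes a full TCP handshake rather than a true half-open probe (SYN, SYN-ACK, then RST), which would need raw sockets. The function names, and the rule that a healthy response is HTTP 200 containing an expected marker, are assumptions for illustration; what counts as healthy is an application decision.

```python
import http.client
import socket


def tcp_connect_check(host: str, port: int, timeout: float) -> bool:
    """Layer 4 check: can a TCP connection be completed within `timeout` seconds?"""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


def response_is_healthy(status: int, body: bytes, expected: bytes) -> bool:
    """Content rule (an assumption): HTTP 200 and the expected marker in the body."""
    return status == 200 and expected in body


def http_content_check(host: str, port: int, path: str,
                       expected: bytes, timeout: float) -> bool:
    """Layer 7 check: GET `path` and verify the content, not just the socket."""
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        return response_is_healthy(resp.status, resp.read(), expected)
    except (OSError, http.client.HTTPException):
        return False
    finally:
        conn.close()
```

For example, with a hypothetical server at `10.0.0.11`, `http_content_check("10.0.0.11", 80, "/health", b"OK", timeout=5.0)` only passes if the application itself answers with the expected content, whereas `tcp_connect_check("10.0.0.11", 80, timeout=5.0)` passes as soon as the listener accepts the connection.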

Also remember that the higher you go up the layers, the more resources you consume on both the ADC and the server. For example, L7 health checks using HTTP GET with a low (short) timeout and a low interval can be the tipping point that takes the server out of rotation.

To be a bit Ivan about it – the intervals are probably a bit longer, as you have to take the “timeout” parameter into account. The worst case (unless I misunderstood you) would be interval * retry + timeout.


So what about in-band checks? Normal health monitors would then only be used to bring the server back up, wouldn’t they?
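One way the split this comment describes could look, as a hypothetical Python sketch: in-band (passive) checking watches real client requests and pulls a server after a run of errors, while the regular active monitor is consulted only to put a pulled server back. The class name and the three-error threshold are illustrative assumptions, not any product's defaults.

```python
class InBandHealth:
    """Hypothetical sketch: client traffic takes a server out, active probes bring it back."""

    def __init__(self, max_consecutive_errors: int = 3) -> None:
        self.max_consecutive_errors = max_consecutive_errors
        self.consecutive_errors = 0
        self.in_rotation = True

    def record_client_request(self, ok: bool) -> None:
        """Called for each real request; errors or timeouts count against the server."""
        if not self.in_rotation:
            return  # no client traffic should reach a server that is out of rotation
        self.consecutive_errors = 0 if ok else self.consecutive_errors + 1
        if self.consecutive_errors >= self.max_consecutive_errors:
            self.in_rotation = False

    def record_active_probe(self, ok: bool) -> None:
        """Called by the regular health monitor; only used to restore the server."""
        if not self.in_rotation and ok:
            self.in_rotation = True
            self.consecutive_errors = 0


server = InBandHealth()
for ok in (True, False, False, False):
    server.record_client_request(ok)
print(server.in_rotation)  # False: three errors in a row from real traffic
server.record_active_probe(True)
print(server.in_rotation)  # True: the active monitor brought it back
```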


I think Ivan mentions it above, but wouldn’t the time be:

The server fails 1 second after the last successful health check, so we wait 14 seconds for the next one.

A = 14 seconds.

At the next health check we probe and wait 5 seconds for a reply.

B = 5 seconds.

Probe fails. We wait another 15 seconds.

C = 15 seconds.

We probe again and wait 5 seconds for a reply.

D = 5 seconds.

Second probe fails so server is declared down.

Failure detection time = A+B+C+D = 39 seconds.

Jack

Thanks for the article. Just a correction to your times:

It’s 5 (timeout) + 15 (check interval) + 5 (timeout) = 25 seconds in the best-case scenario, when the server goes down, let’s say, 1 millisecond before the balancer sends a request.

And 25 + 15 (check interval) = 40 seconds in the worst-case scenario, when the server goes down, let’s say, 1 millisecond after the last check 😉
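The calculations in these comments can be written in closed form. With $I$ the interval, $T$ the timeout and $C$ the count, and assuming a healthy server answers instantly, the result depends on whether the next probe starts $I$ seconds after the previous probe *started* (called fixed-rate here) or after it *finished*, timeout included (fixed-delay). Both labels are illustrative rather than vendor terminology, and which behavior a given load balancer follows is worth checking in its documentation.

$$
\begin{aligned}
&\text{fixed-rate:}  && t_{\text{best}} \approx (C-1)\,I + T, && t_{\text{worst}} \approx C\,I + T \\
&\text{fixed-delay:} && t_{\text{best}} \approx (C-1)\,I + C\,T, && t_{\text{worst}} \approx C\,(I + T)
\end{aligned}
$$

With $I = 15$, $T = 5$ and $C = 2$, fixed-rate gives 20 and 35 seconds (the worst case being the interval * retry + timeout suggested above), while fixed-delay gives 25 and 40 seconds, the figures in this last comment. Jack’s 39 seconds is the fixed-delay worst case with the failure landing 1 second after a good probe.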