Jan 11th, 2016: New Year! Also, there was a comment below about adding -sha256 to the signing (both self-signed and CSR signing) since browsers are starting to reject SHA1. Added (I ran through a test, it worked out for me at least).

November 18th, 2015: Oops! A few have mentioned additional errors that I missed. Fixed.

July 11th, 2015: There were a few bugs in this article that went unfixed for a while. They’ve been fixed.

SSL (or TLS if you want to be super totally correct) gives us many things (despite many of the recent shortcomings).

- Privacy (stop looking at my password)

- Integrity (data has not been altered in flight)

- Trust (you are who you say you are)

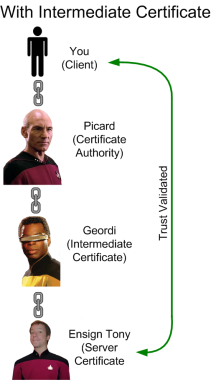

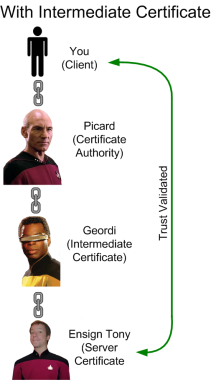

All three of those are needed when you’re buying stuff from, say, Amazon (damn you, Amazon Prime!). But we also use SSL for web user interfaces and other GUIs when administering devices in our control. When a website gets an SSL certificate, its owner typically purchases one from a major certificate authority such as DigiCert, Symantec (they bought Verisign’s certificate business), or if you like the murder of elephants and freedom, GoDaddy. Certificates range from around $12 USD a year to several hundred, depending on the company and level of trust. The benefit that these certificate authorities provide is a chain of trust. Your browser trusts them, they trust a website, therefore your browser trusts the website (check my article on SSL trust, which contains the best SSL diagram ever conceived).

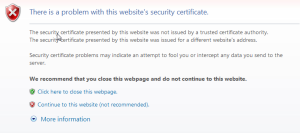

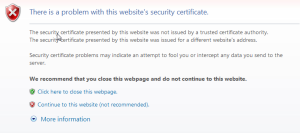

Your devices, on the other hand, the ones you configure and only your organization accesses, don’t need that trust chain built upon the public infrastructure. For one, it could get really expensive buying an SSL certificate for each device you control. And secondly, you set the devices up, so you don’t really need that level of trust. So web user interfaces (and other SSL-based interfaces) are almost always protected with self-signed certificates. They’re easy to create, and they’re free. They also provide you with the privacy that comes with encryption, although they don’t do anything about trust. Which is why when you connect to a device with a self-signed certificate, you get a certificate warning from your browser.

So you have the choice, buy an overpriced SSL certificate from a CA (certificate authority), or get those errors. Well, there’s a third option, one where you can create a private certificate authority, and setting it up is absolutely free.

OpenSSL

OpenSSL is a free utility that comes with most installations of Mac OS X, Linux, the *BSDs, and Unixes. You can also download a binary copy to run on your Windows installation. And OpenSSL is all you need to create your own private certificate authority. The process for creating your own certificate authority is pretty straightforward:

- Create a private key

- Self-sign

- Install root CA on your various workstations

Once you do that, every device that you manage via HTTPS just needs to have its own certificate created with the following steps:

- Create CSR for device

- Sign CSR with root CA key

You can have your own private CA set up in less than an hour. And here’s how to do it.

Create the Root Certificate (Done Once)

Creating the root certificate is easy and can be done quickly. Once you do these steps, you’ll end up with a root SSL certificate that you’ll install on all of your desktops, and a private key you’ll use to sign the certificates that get installed on your various devices.

Create the Root Key

The first step is to create the private root key, which takes a single command. In the example below, I’m creating a 2048 bit key:

openssl genrsa -out rootCA.key 2048

The standard key sizes today are 1024, 2048, and to a much lesser extent, 4096. I go with 2048, which is what most people use now. 4096 is usually overkill (a 4096 bit key is roughly 5 times more computationally intensive than a 2048 bit key), and people are transitioning away from 1024. Important note: Keep this private key very private. This is the basis of all trust for your certificates, and if someone gets a hold of it, they can generate certificates that your browser will accept. You can also create a key that is password protected by adding -des3:

openssl genrsa -des3 -out rootCA.key 2048

You’ll be prompted to give a password, and from then on you’ll be challenged for the password every time you use the key. Of course, if you forget the password, you’ll have to do all of this all over again.
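If you want a quick sanity check on the key you just made, something like the following should do. It’s only a sketch: the scratch directory and the chmod are my own additions for illustration, and the file name just follows the example above.

```shell
# Work in a scratch directory so nothing existing gets overwritten
# (the scratch directory is an assumption made for this sketch)
workdir=$(mktemp -d)
cd "$workdir"

# Generate the 2048 bit root key, same command as above
openssl genrsa -out rootCA.key 2048

# Make the key readable and writable by its owner only
chmod 600 rootCA.key

# Ask OpenSSL to check that the key is internally consistent
openssl rsa -in rootCA.key -check -noout
```

If you went with the -des3 version, the last command will ask for the password before it checks anything.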

The next step is to create the root certificate and self-sign it with this key.

openssl req -x509 -new -nodes -key rootCA.key -sha256 -days 1024 -out rootCA.pem

This will start an interactive script which will ask you for various bits of information. Fill it out as you see fit.

You are about to be asked to enter information that will be incorporated

into your certificate request.

What you are about to enter is what is called a Distinguished Name or a DN.

There are quite a few fields but you can leave some blank

For some fields there will be a default value,

If you enter '.', the field will be left blank.

-----

Country Name (2 letter code) [AU]:US

State or Province Name (full name) [Some-State]:Oregon

Locality Name (eg, city) []:Portland

Organization Name (eg, company) [Internet Widgits Pty Ltd]:Overlords

Organizational Unit Name (eg, section) []:IT

Common Name (eg, YOUR name) []:Data Center Overlords

Email Address []:none@none.com

Once done, this will create an SSL certificate called rootCA.pem, signed by itself, valid for 1024 days, and it will act as our root certificate. The interesting thing about traditional certificate authorities is that their root certificates are also self-signed. But before you start your own public certificate authority, remember that the trick is getting those certs into every browser in the entire world.
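If you’d rather skip the prompts, the same answers can be passed on the command line with -subj. Here’s a rough sketch that also looks at the result; the scratch directory is my own assumption, and the subject values are simply the ones typed in above.

```shell
# Scratch directory is an assumption made for this sketch
workdir=$(mktemp -d)
cd "$workdir"

openssl genrsa -out rootCA.key 2048

# -subj supplies the Distinguished Name up front, so no prompts appear
openssl req -x509 -new -nodes -key rootCA.key -sha256 -days 1024 \
    -subj "/C=US/ST=Oregon/L=Portland/O=Overlords/OU=IT/CN=Data Center Overlords" \
    -out rootCA.pem

# Show who the certificate is for, who signed it, and its validity dates
openssl x509 -in rootCA.pem -noout -subject -issuer -dates

# A self-signed certificate should verify against itself
openssl verify -CAfile rootCA.pem rootCA.pem
```

The subject and issuer lines should show the same name, which is what self-signed means in practice.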

Install Root Certificate Into Workstations

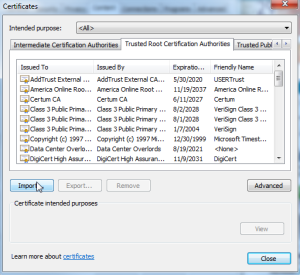

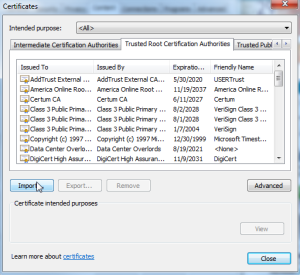

For your laptops/desktops/workstations, you’ll need to install the root certificate into your trusted certificate repositories. This can get a little tricky. Some browsers use the default operating system repository. For instance, in Windows both IE and Chrome use the default certificate management. In IE, go to Internet Options, open the Content tab, then hit the Certificates button. In Chrome, go to Options, then Under The Hood, then Manage certificates. They both take you to the same place, the Windows certificate repository. You’ll want to install the root CA certificate (not the key) under the Trusted Root Certification Authorities tab.

However, in Windows Firefox has its own certificate repository, so if you use IE or Chrome as well as Firefox, you’ll have to install the root certificate into both the Windows repository and the Firefox repository. In a Mac, Safari, Firefox, and Chrome all use the Mac OS X certificate management system, so you just have to install it once on a Mac. With Linux, I believe it’s on a browser-per-browser basis.
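Since you’ll be copying the root certificate around to a bunch of machines, it can be worth comparing fingerprints before you install a copy. A sketch of the idea, where the scratch directory, the throwaway certificate, and the copied file name are all stand-ins I made up for illustration:

```shell
# Scratch directory is an assumption made for this sketch
workdir=$(mktemp -d)
cd "$workdir"

# Throwaway root certificate standing in for the real rootCA.pem
openssl req -x509 -new -nodes -newkey rsa:2048 -keyout rootCA.key \
    -sha256 -days 1024 -subj "/CN=Example Root CA" -out rootCA.pem

# Pretend this copy was transferred to a workstation
cp rootCA.pem copied-rootCA.pem

# The two fingerprints should be identical before the copy is installed
openssl x509 -in rootCA.pem -noout -fingerprint -sha256
openssl x509 -in copied-rootCA.pem -noout -fingerprint -sha256
```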

Create A Certificate (Done Once Per Device)

Every device on which you wish to install a trusted certificate will need to go through this process. First, just like with the root CA step, you’ll need to create a private key (different from the root CA key).

openssl genrsa -out device.key 2048

Once the key is created, you’ll generate the certificate signing request.

openssl req -new -key device.key -out device.csr

You’ll be asked various questions (Country, State/Province, etc.). Answer them how you see fit. The important question to answer though is common-name.

Common Name (eg, YOUR name) []: 10.0.0.1

Whatever you see in the address field in your browser when you go to your device must be what you put under common name, even if it’s an IP address. Yes, even an IP (IPv4 or IPv6) address works under common name. If it doesn’t match, even a properly signed certificate will not validate correctly and you’ll get the “cannot verify authenticity” error. Once that’s done, you’ll sign the CSR, which requires the CA root key.

openssl x509 -req -in device.csr -CA rootCA.pem -CAkey rootCA.key -CAcreateserial -out device.crt -days 500 -sha256

This creates a signed certificate called device.crt which is valid for 500 days (you can adjust the number of days of course, although it doesn’t make sense to have a certificate that lasts longer than the root certificate). The next step is to take the key and the certificate and install them in your device. Most network devices that are controlled via HTTPS have some mechanism for you to install them. For example, I’m running F5’s LTM VE (virtual edition) as a VM on my ESXi 4 host. Log into F5’s web GUI (this should be the last time you’re greeted by the warning), and go to System, Device Certificates, and Device Certificate.

In the drop down select Certificate and Key, and either paste the contents of the key and certificate file, or upload them from your workstation.

After that, all you need to do is close your browser and hit the GUI site again. If you did it right, you’ll see no warning and a nice greenness in your address bar.
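If the warning is still there, it helps to check the certificate from the command line before blaming the device. Below is a sketch of the whole per-device flow, run against a throwaway root CA in a scratch directory (both are assumptions for illustration). The device.ext file and the -extfile option are also my additions and not part of the steps above: recent browsers look at the subjectAltName extension rather than the common name, so the sketch puts the same address there too.

```shell
# Scratch directory is an assumption made for this sketch
workdir=$(mktemp -d)
cd "$workdir"

# Throwaway root CA, standing in for the real rootCA.key and rootCA.pem
openssl genrsa -out rootCA.key 2048
openssl req -x509 -new -nodes -key rootCA.key -sha256 -days 1024 \
    -subj "/CN=Example Root CA" -out rootCA.pem

# Device key and CSR; the common name is the address typed into the browser
openssl genrsa -out device.key 2048
openssl req -new -key device.key -subj "/CN=10.0.0.1" -out device.csr

# Hypothetical extension file that repeats the address as a subjectAltName
printf 'subjectAltName = IP:10.0.0.1\n' > device.ext

# Sign the CSR with the root key, adding the extension from device.ext
openssl x509 -req -in device.csr -CA rootCA.pem -CAkey rootCA.key \
    -CAcreateserial -out device.crt -days 500 -sha256 -extfile device.ext

# Check that the device certificate chains back to the root
openssl verify -CAfile rootCA.pem device.crt

# Check the names and the expiry date
openssl x509 -in device.crt -noout -subject -issuer -enddate
```

For a device you reach by hostname, the extension line would use DNS: followed by the name instead of IP: and the address.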

And speaking of VMware, you know that annoying message you always get when connecting to an ESXi host?

You can get rid of that by creating a key and certificate for your ESXi server and installing them as /etc/vmware/ssl/rui.crt and /etc/vmware/ssl/rui.key.